While it’s easy to make reports in Power BI, it can be a pain to troubleshoot and tune them. Properly performance tuning Power BI reports requires identifying the bottleneck and using a handful of external applications. This article covers how to narrow down the performance problem, as well as general best practices. Once you understand the fundamentals it gets a lot easier.

Which part is slow?

As a report developer, it can be frustrating to know that something is slow without being able to put your finger on it. In my mind, there are four main areas where there might be a slowdown:

- Data refresh

- Model calculations

- Visualization rendering

- Everything else

Identifying which one of these is the problem is the first step to improving performance. In most cases, if a report is slow, the issue is in the second area: the model calculations, meaning your data model.

Tuning the data refresh

The refresh is rarely the problem because the user never sees the refresh process if you are using Import mode. So usually you are going to see a slow refresh when you are authoring the report or if a scheduled refresh fails. Still, it’s important to tune your data refresh to avoid timeouts and minimize how much data you are loading.

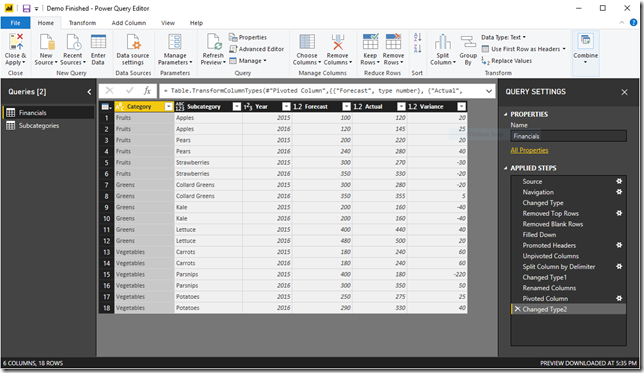

Verify that query folding is working

If you are querying a relational database, especially SQL Server, then you want to make sure that query folding is being applied. Query folding is when M code in Power Query is pushed down to the source system, often via a SQL query. One simple way to confirm that query folding is working is to right-click a step and select View Native Query. This will show you the SQL query that will be run against the database. If you have admin privileges on the server, you can also use extended events to monitor the server for queries. If you do this, you should limit the monitoring to the Data Mashup engine, which is the application name for Power Query.

Some transformation steps can break query folding, making any steps after them unfoldable. Finding out which steps break folding is a matter of trial and error. But simple transformations, such as filtering rows and removing columns, should be applied as early as possible, so that they run before any step that breaks folding.
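To make the idea of pushing work down to the source concrete, here is a rough analogy in Python using the built-in sqlite3 module. It is not Power Query, and the table and column names are made up for illustration. It only shows the difference between letting the database filter the data and pulling everything across before filtering it yourself.

```python
import sqlite3

# Hypothetical in-memory table standing in for a real source database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (id INTEGER, region TEXT, amount REAL, notes TEXT)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?, ?)",
    [(i, "West" if i % 4 == 0 else "East", float(i), "x" * 50) for i in range(1000)],
)

# "Folded": the row filter and column selection run inside the source,
# so only the needed rows and columns ever leave the database.
folded = conn.execute(
    "SELECT id, amount FROM sales WHERE region = ?", ("West",)
).fetchall()

# "Not folded": every row and column is transferred, then filtered locally.
everything = conn.execute("SELECT * FROM sales").fetchall()
unfolded = [(row[0], row[2]) for row in everything if row[1] == "West"]

print("rows transferred without folding:", len(everything))
print("rows transferred with folding:", len(folded))
```

Both approaches end up with the same rows, but the second one moves the whole table, including the wide notes column, before throwing most of it away.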

Minimize the data you are loading

If you don’t need certain columns, then remove them. If you don’t need certain rows of data, then filter them out. This can improve performance when refreshing the data AND when modelling it later on, which is a win-win.

If your Power BI file is more than 100MB, there is a good chance you are going to see a slowdown due to the data size. Once it gets bigger than that it is important to either work on your DAX code, or look into an alternative querying/hosting method such as DirectQuery or Power BI Premium.

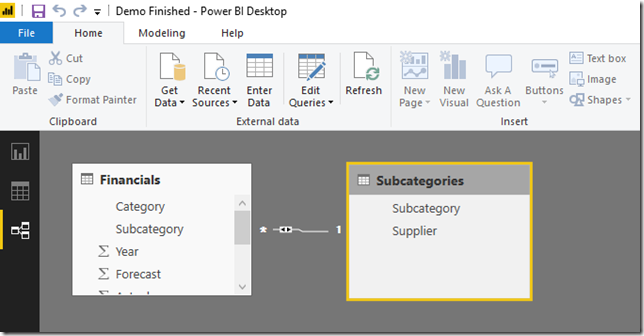

Consider performing joins in DAX, not in M

If you need to establish a relationship purely for filtering reasons, such as using a dimension table, then consider creating the relationship in DAX instead of in Power Query. DAX is blazing fast at applying filters, whereas Power Query can be very slow at applying a join, especially if that join is not being folded down to the SQL level. That being said, sometimes the data makes more sense as a flattened table for usability purposes.

Review your applied steps

Because Power Query is such a graphical tool, it can be easy to make changes and then forget about them. I had one customer who would often sort his data and then accidentally leave the step in there. This was terrible for performance.

Make use of SQL indexes

If your data is in a relational database, then you want to make sure there are indexes to support your queries. If you are using just a few columns, it may be possible to create a covering index that includes all of the columns you need. Work with your DBA to see what your options are. If you are using SQL Server, you can use execution plans to identify whether the query is using an index. You will need the SHOWPLAN permission on the database, and you will need to use the View Native Query functionality to get the underlying SQL query.

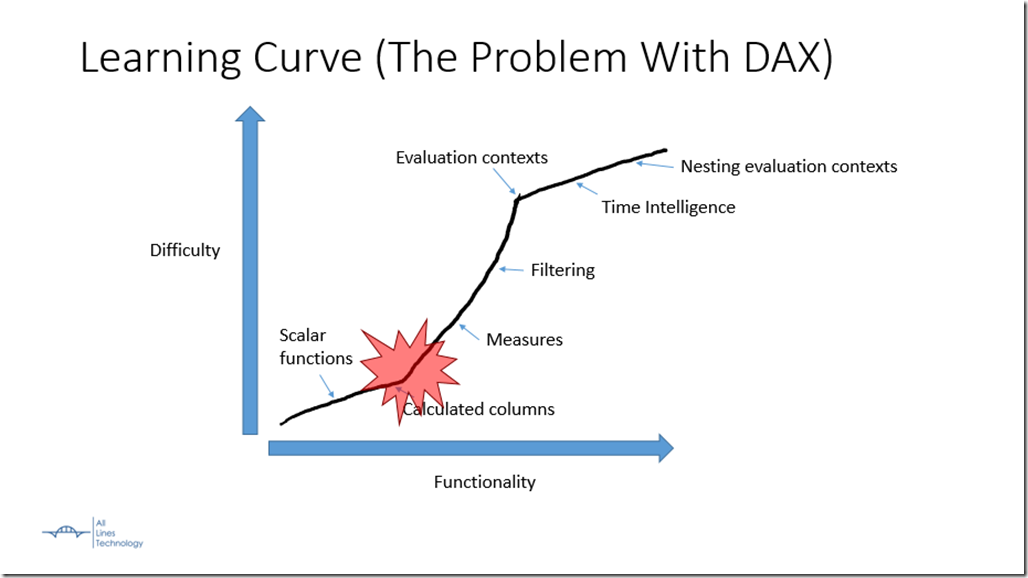

Tuning the model calculations

When someone says that a Power BI report is slow, it is usually an issue with the DAX modelling. Unfortunately, that fact isn’t obvious to the user and it can look like the visuals themselves are slow. Thankfully, there is a tool to identify the difference: the Power BI Performance Analyzer.

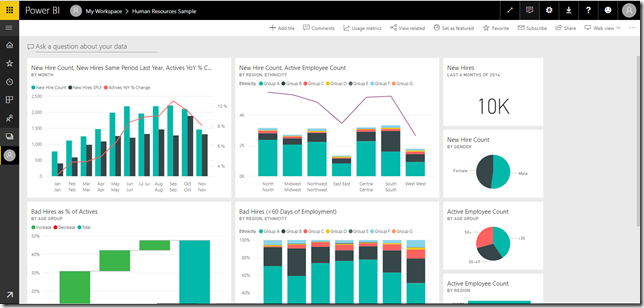

Use the Power BI Performance Analyzer

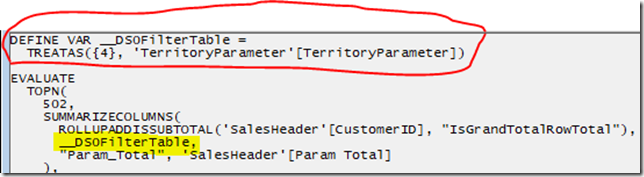

If your report is slow, the very first thing you should do is run the Power BI Performance Analyzer. This will give you detailed measurements of which visuals are slow, as well as how much of that time is spent running DAX and how much is spent rendering the visual. Additionally, this tool gives you the actual DAX code being run behind the scenes, which you can run manually with DAX Studio. When I am dealing with a tricky performance problem, this is my go-to tool.

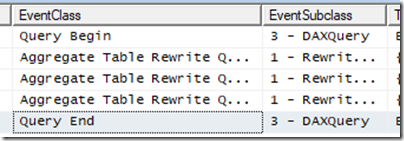

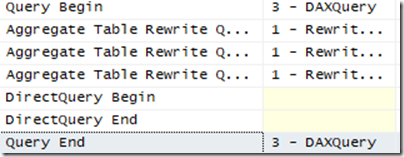

Confirm that the storage engine is being used

When using DAX Studio, you can see how much time is spent with the storage engine versus the formula engine. The storage engine can only handle simple operations, but is multi-threaded and very fast. Whenever possible, it’s better to make use of the storage engine.

Remove data you don’t need

Because of the way the data is stored in Power BI, the more columns you have the worse compression and performance you have. Additionally, unnecessary rows can slow things down as well. Two years of data is almost always going to be faster than 10 years of the same data.

Additionally, avoid columns with a lot of unique values such as primary keys. The more repeated values in a column, the better the compression because of run-length encoding. Unique columns can actually take up more space when encoded for Power BI than the source data did.
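A toy sketch of run-length encoding shows why repeated values compress so well and unique values do not. This is plain Python with made-up columns, not how Power BI implements its storage, and the real engine does more than this one technique.

```python
from itertools import groupby


def run_length_encode(values):
    """Collapse consecutive repeats into (value, count) pairs."""
    return [(value, len(list(group))) for value, group in groupby(values)]


# Hypothetical low-cardinality column: a few values, each repeated many times.
status_column = ["Open"] * 500 + ["Closed"] * 400 + ["Pending"] * 100

# Hypothetical high-cardinality column: every value is unique, like a primary key.
key_column = list(range(1000))

status_runs = run_length_encode(status_column)
key_runs = run_length_encode(key_column)

print(len(status_column), "values ->", len(status_runs), "runs")
print(len(key_column), "values ->", len(key_runs), "runs")
```

The status column collapses to a handful of pairs, while the key column ends up with one pair per value, so the encoded form holds a value plus a count for every row and is larger than the column it started from.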

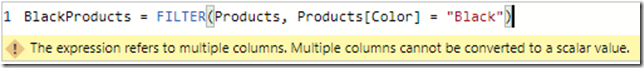

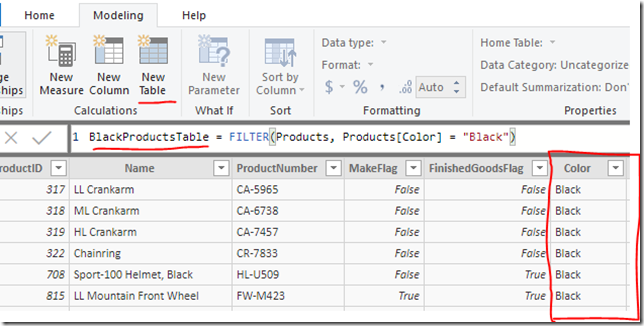

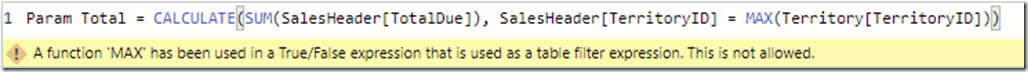

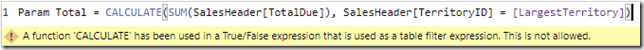

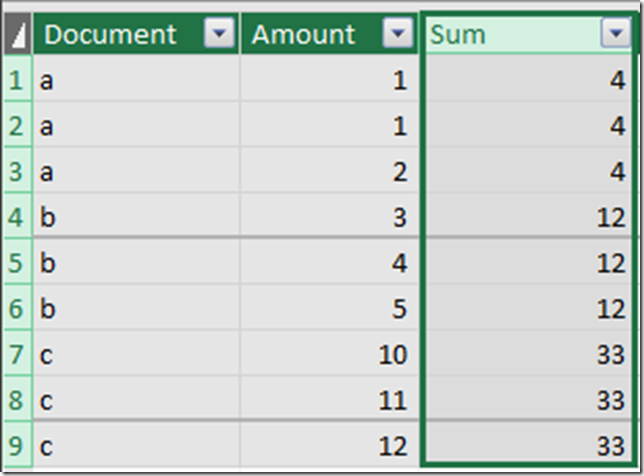

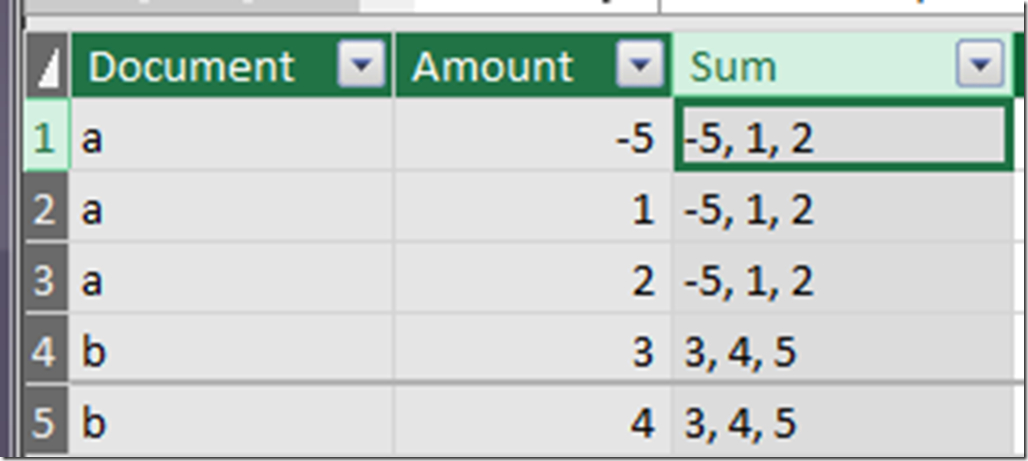

Avoid iterator functions

Iterator functions will calculate a result row by agonizing row, which is not ideal for a columnar data store like the one DAX runs on. There are two ways to identify iterator functions. The aggregation functions generally end in an X: SUMX, MAXX, CONCATENATEX, etc. Additionally, many iterators take in a table as the first parameter and then an expression as the second parameter. Iterators with simple logic are generally fine, and sometimes are secretly converted to more efficient forms.

Use a star schema

Using a star schema, a transaction table in the center surrounded by lookup tables, has a number of benefits. It encourages filtering based on the lookup tables and aggregating based on the transaction table. The two things DAX is best at are filtering and aggregating. A star schema also keeps the relationships simple and easy to understand.
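The filter-then-aggregate pattern that a star schema encourages can be sketched in a few lines of Python. The tables, keys, and the total_sales helper below are hypothetical and only illustrate the shape of the idea, not how the DAX engine evaluates it.

```python
# Hypothetical lookup (dimension) table: one row per product.
products = {
    1: {"name": "Bike", "category": "Outdoor"},
    2: {"name": "Tent", "category": "Outdoor"},
    3: {"name": "Lamp", "category": "Home"},
}

# Hypothetical transaction (fact) table: many rows, each keyed to a product.
sales = [
    {"product_id": 1, "amount": 300.0},
    {"product_id": 2, "amount": 120.0},
    {"product_id": 3, "amount": 40.0},
    {"product_id": 1, "amount": 310.0},
    {"product_id": 3, "amount": 45.0},
]


def total_sales(category):
    """Filter on the small lookup table, then aggregate the transaction table."""
    wanted_ids = {pid for pid, p in products.items() if p["category"] == category}
    return sum(row["amount"] for row in sales if row["product_id"] in wanted_ids)


print(total_sales("Outdoor"))
print(total_sales("Home"))
```

The filter only ever touches the small lookup table, and the transaction table is only ever summed, which is the division of labor the star schema is meant to encourage.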

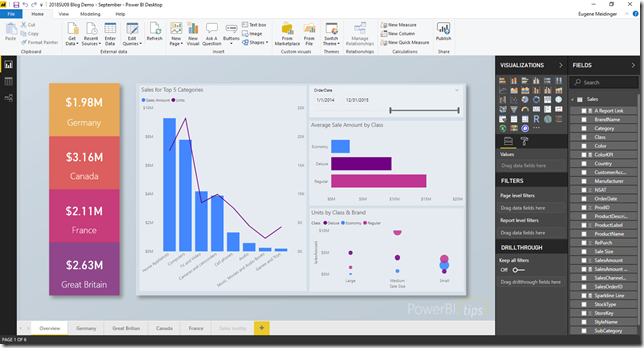

Visualization Rendering

Sometimes the issue isn’t necessarily the data model but the visuals. I’ve seen this when a user tries to put more than 20 different things on a page or has a table with thousands of rows.

Lean towards aggregation

The DAX engine, VertiPaq, is really good at two things: filtering and aggregations. This means it’s ideal for high-level reporting like KPIs and traditional dashboards. This also means it is not good at very detail-heavy and granular reporting. If you have a table with 10,000 rows and complex measures being calculated for each row, it’s going to be really slow. If you need to be able to show detailed information, take advantage of drill-through pages or report tooltips to pre-filter the data that is being shown.

Filter what is being shown

Unless you are using simple aggregations, it’s not advisable to show all of the data at once. One way to deal with this is to apply report or page filters to limit how many rows are being rendered at any one time. Another option is to use drill-through pages and report tooltips to implicitly filter the data being shown.

Limit how many visualizations are on screen

The part of Power BI that renders the visualization is single-threaded and can be slow sometimes. Whenever possible, try not to have more than 20 elements on screen. Even simple lines and boxes can slow down rendering a little bit.

Everything else

Review your query method

This article assumes that you are using the import method to pull in your data. However, DirectQuery or live connections might be much faster depending on the size of your data.

Sometimes a report can seem slow when you are developing it. Make sure that there aren’t any applications on your PC that are consuming all of your resources and making Power BI Desktop seem slow.