Last week I struggled to load and process the data. I was frustrated and a good bit disoriented. This week has been mostly backing up (again) and getting a better idea of what’s going on.

Understanding Databricks is core to understanding Fabric

One of the things that helps to understand Fabric is that it’s heavily influenced by Databricks. It’s built on Delta Lake, which was created by Databricks and open sourced in 2019. You are encouraged to use a medallion architecture, which, as far as I can tell, also comes from Databricks.

You will be a lot less frustrated if you realize that much of what’s going on with Fabric is a blend of open source formats and protocols, combined with the idiosyncrasies of Databricks and then those of Microsoft. David Gomes has a good post about data lake file formats, and it’s interesting to imagine the parallel universe where Fabric is built on Iceberg (which is also based on Parquet files) instead of Delta Lake. (Note: I found this post in this week’s issue of Brent Ozar’s newsletter.)

It was honestly a bit refreshing to see Marco Russo, DAX expert, befuddled on Twitter and LinkedIn about how wishy-washy medallion architecture is. This was reaffirmed by Simon Whiteley’s recent video.

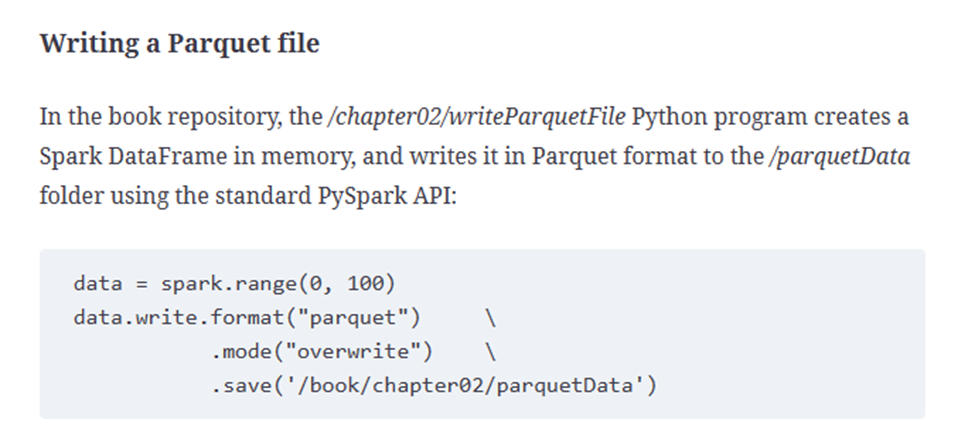

This also means that the best place to learn about these is Databricks itself. I’ve been skimming through Delta Lake: Up & Running and finding it helpful. It looks like you can also download it for free if you don’t mind a sales call.

What should I use for ETL?

After playing around some more, I think the best approach right now is to work with notebooks for all of my data transformation. So far I see a couple of benefits. First, it’s easier to put the code into source control, at least in theory. In practice, a notebook file is actually a big ol’ JSON file, so the commits may look a bit ugly.

Second, it’s easier from an “I’m completely lost” perspective, because you can step through individual steps, see the results, etc. This is especially true when Delta Lake: Up & Running has exercises in PySpark. I’d prefer to work with dataflows because that’s what I’m comfortable with, but clearly that hasn’t worked for me so far.

Tomaž Kaštrun has a blog series on getting into Fabric which shows how easy it is to create a PySpark notebook. I am a bit frustrated that I didn’t realize notebooks were a valid ETL tool; I always thought of them as being for data science experiments. Microsoft has some terse documentation that covers some of the options for getting data into your Lakehouse. I hope they continue to expand it like they have done with the Power BI guidance.
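On the source control point: one way to make notebook commits less ugly is to strip the cell outputs before committing. Here is a minimal sketch of that idea in plain Python. It assumes the notebook has been exported in the Jupyter-style .ipynb layout (a top-level "cells" list where code cells carry "outputs" and "execution_count"); I haven’t confirmed that this is exactly how Fabric stores notebooks in a repo, and the function names strip_outputs and strip_file are made up for illustration.

```python
import json


def strip_outputs(notebook: dict) -> dict:
    """Return a copy of a Jupyter-style notebook dict with cell outputs removed.

    Assumes the .ipynb layout: a top-level "cells" list in which code cells
    have "outputs" and "execution_count" keys.
    """
    # A JSON round trip is a cheap deep copy, so the caller's dict is untouched.
    cleaned = json.loads(json.dumps(notebook))
    for cell in cleaned.get("cells", []):
        if cell.get("cell_type") == "code":
            cell["outputs"] = []            # drop rendered results
            cell["execution_count"] = None  # drop the run counter
    return cleaned


def strip_file(src_path: str, dst_path: str) -> None:
    """Read a notebook file, strip its outputs, and write the cleaned copy."""
    with open(src_path, encoding="utf-8") as f:
        notebook = json.load(f)
    with open(dst_path, "w", encoding="utf-8") as f:
        # Sorted keys and a fixed indent keep diffs stable between commits.
        json.dump(strip_outputs(notebook), f, indent=1, sort_keys=True)
        f.write("\n")
```

With outputs gone, a commit should mostly show changes to the code cells themselves rather than to result tables and run counters.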
