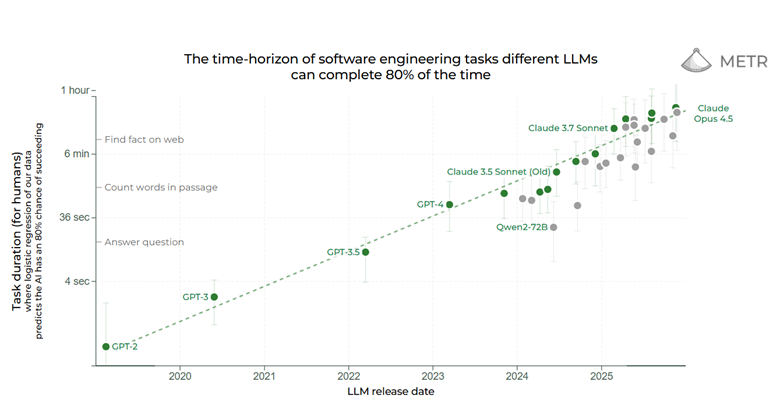

In this post, I will assume 1) you have no experience with LLMs at all and 2) you think the idea of AI writing 80-90% of our code in the future is complete poppycock. Personally, I feel... less certain about the future, but I agree the claims of AI CEOs sound like total BS.

First we’ll define vibe engineering, then I’ll give you the 6 rules for surviving the LLM Casino.

What is vibe coding and vibe engineering?

Andrej Karpathy had a long definition on Twitter of vibe coding that in many ways has been misconstrued to mean all sorts of things, like one-shotting from prompt to final product, or writing full software but never looking at the code. Here is my earnest definition:

Vibe coding is when you enter an addictive flow state with an LLM slot machine. You test the software, provide natural language feedback, and may occasionally look at code, but you NEVER write a single line of code.

As you can imagine, vibe coding is FUN, but in the same way that going to Vegas is fun. And in the same way, both are dangerous. Sometimes you get a slick-looking website to show your Nordic friends, sometimes your MSPaint alternate history clone looks like crap, sometimes you delete prod. Fun!

Vibe engineering is a little bit closer to going to Vegas and counting cards, or going to Vegas with strict rules. When my husband and I went for Fabcon, we played 5-dollar blackjack. We both have addictive personalities and I had never properly gambled before, so we needed a plan and we needed to hold each other accountable.

We agreed in advance we wouldn’t spend more than an allocated amount. We were having so much fun we changed that to allocated amount + winnings. Well, so much for willpower. Ahead of time, we also agreed that we were okay if we lost all our starting money. This was a $75 entertainment expense and not a bet. Cheaper than our Penn and Teller tickets, but not as cool either.

So, we entered the den of vice with $75 between us. I ended up wasting $15 on roulette because I’m stupid and didn’t understand I could cash the roulette chips back out to regular chips? Did I mention this was my first time in a casino?

Miles did really well, so between the two of us we left with $10 more than we started. Overall, we had a wonderful, wonderful time. We got to socialize with some vacationers. The dealer had to follow robotic instructions for how she played but was free to give us helpful tips on when to hit and how to beat her. Just purely delightful.

Also, whenever I see slot machines now, I feel sick to my stomach about all the dark UX patterns. They don’t look like they did in the movies anymore. They are just giant wraparound screens with bright lights and sounds. Horrific iPads warped by Mammon.

Slot machines are the ultimate Skinner box, designed to manipulate and abuse you. Utterly disgusting 🤢. Remember, some casino games are more dangerous than others, so pick your poison wisely.

Anyway, this is vibe engineering. Play stupid games, get stupid prizes, and have a plan along the way. Ready to start?

The 6 rules in the LLM casino

1. The house always wins and LLMs always screw up

In gambling, the house always wins in the long term. Maybe not if you can count cards. Maybe not if you are lucky. But in general, assume you are going to lose your shirt. Don’t bet more than you are willing to lose.

In the same way, assume the LLM will screw up eventually. For example, maybe it will delete your prod database. Maybe it will cause an AWS outage.

Accordingly, take as many steps as possible to reduce the negative consequences of screw-ups. Use Git and commit frequently. Consider using a sandbox or Docker container. Implement linting, type checking, and CI/CD.

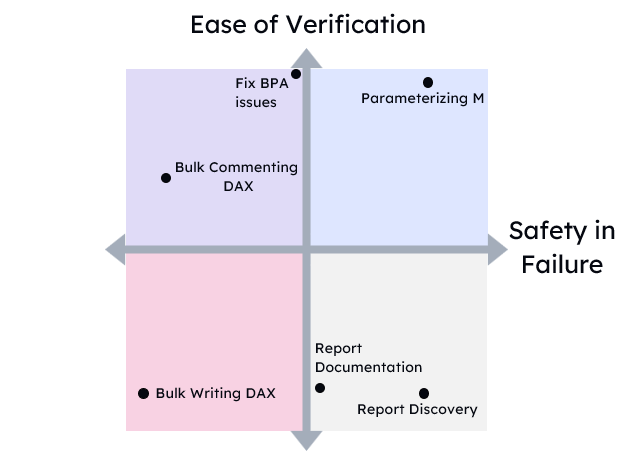

Additionally, only bet what you are willing to lose. Start with side projects. Start with tasks where if the code is completely wrong, nothing is hurt. Do tasks that are easily verifiable and have a low risk of subtle errors.

2. Pick the games with the best returns

Different casino games have different odds. Blackjack is one of the best games to play if you want to lose your money slowly. Keno and roulette are the worst (whoops!). So how do you pick games with the best returns for LLMs?

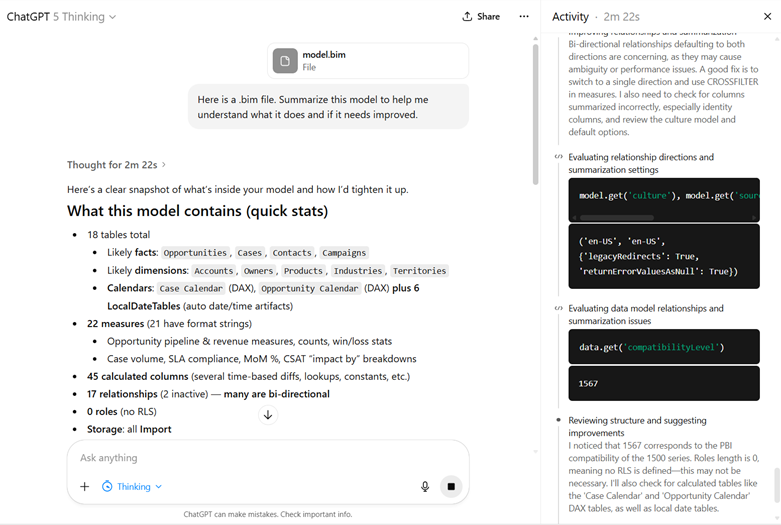

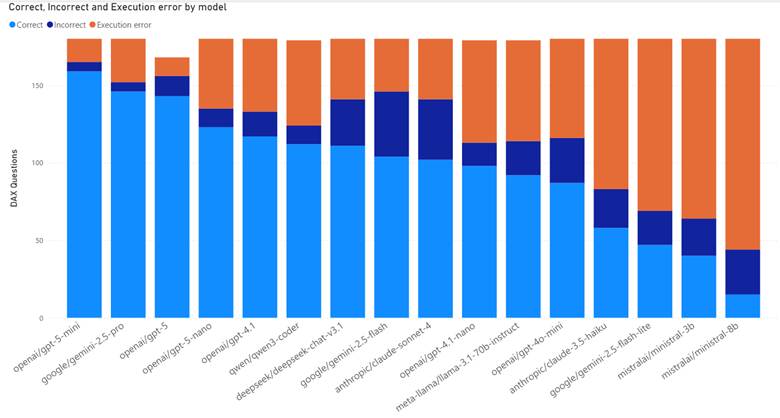

LLMs are pre-trained on massive, massive internet-sized amounts of data. So, to a first approximation, languages that have a large number of GitHub repos, like Python and TypeScript, will do better.

Modern “reasoning” LLMs are trained with Reinforcement Learning with Verifiable Rewards. These are math and coding tasks where there is a known correct answer, and the models can be rewarded for getting complete or partial credit towards those solutions. So, to a second approximation, you want languages and problems where there are clear and correct solutions.

According to one interesting study, one of the best languages for LLMs is Elixir, in part because there is often a single right way to do things in that language, unlike, say, PowerShell.
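To make the “verifiable rewards” idea a bit more concrete, here is a toy sketch in Python. It is not how any lab actually computes rewards; the function name `verifiable_reward` and the test-case format are made up for illustration. The only point is that a known correct answer lets you score an attempt automatically, with partial credit for partially right code.

```python
from typing import Callable, Sequence, Tuple

# Hypothetical format: each case is (arguments, expected result).
TestCase = Tuple[tuple, object]


def verifiable_reward(candidate: Callable, cases: Sequence[TestCase]) -> float:
    """Return the fraction of test cases the candidate gets right (0.0 to 1.0)."""
    if not cases:
        return 0.0
    passed = 0
    for args, expected in cases:
        try:
            if candidate(*args) == expected:
                passed += 1
        except Exception:
            # A crash counts as a failed case, not a failed run.
            pass
    return passed / len(cases)


# Three cases for an "add two numbers" task.
ADD_CASES = [((1, 2), 3), ((2, 2), 4), ((0, 0), 0)]
```

A correct `lambda a, b: a + b` scores full credit on `ADD_CASES`, while a buggy `lambda a, b: a * b` only passes two of the three cases. Problems where you can write that kind of checker are the good tables in this casino.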

3. Understand your poker chips

In a casino, you often bet with poker chips. In the LLM casino you have two types of chips: tokens and time.

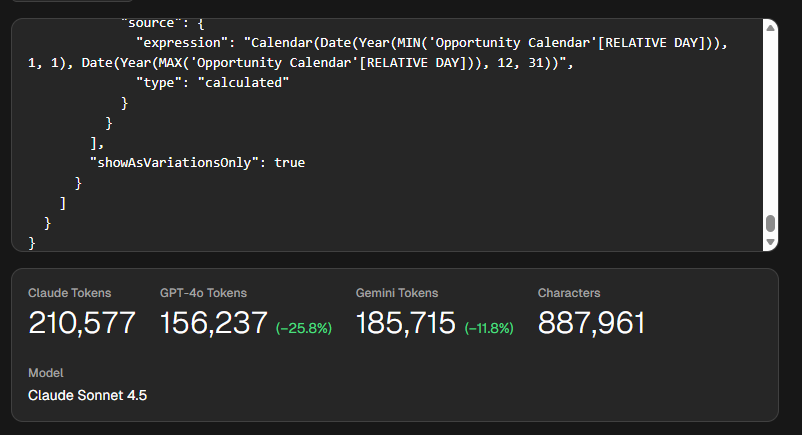

Tokens are subword chunks, and they are how LLMs think, speak, everything. Model providers charge by the token, with different rates for input tokens, output tokens, and cached prompt tokens. When it comes to cost, this will be the number one thing to think about. Imagine if you got charged 1 cent per word every time you sent a text message. It would affect how you wrote!
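If you want to see how the three rates add up, here is a back-of-the-napkin sketch in Python. The prices in `EXAMPLE_RATES` are invented for illustration and are not any provider's real pricing, and `TokenRates` and `estimate_cost` are hypothetical names; check your provider's pricing page for the real numbers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenRates:
    """Prices in US dollars per one million tokens (made-up values below)."""
    input_per_million: float
    cached_input_per_million: float
    output_per_million: float


def estimate_cost(rates: TokenRates, input_tokens: int,
                  cached_input_tokens: int, output_tokens: int) -> float:
    """Estimate the dollar cost of one request from its token counts."""
    total = (
        input_tokens * rates.input_per_million
        + cached_input_tokens * rates.cached_input_per_million
        + output_tokens * rates.output_per_million
    )
    return total / 1_000_000


# Assumed example rates: cached input is cheap, output is expensive.
EXAMPLE_RATES = TokenRates(
    input_per_million=3.0,
    cached_input_per_million=0.3,
    output_per_million=15.0,
)
```

With these made-up rates, `estimate_cost(EXAMPLE_RATES, 200_000, 800_000, 50_000)` comes to about $1.59, and the 50k output tokens are almost half of it. That is why a chatty agent burns chips faster than a quiet one.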

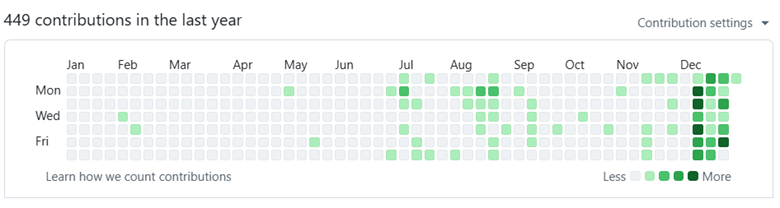

The other thing that’s easy to blow in LLM Vegas, where there are no clocks or windows, is time. Time building the wrong thing. Time waiting for the AI. Time cleaning it up. One study found experienced devs in complex repos took roughly 20% longer when using Cursor, even though they thought it was making them faster. Another study found junior devs were not significantly faster for basic tasks in an unfamiliar library.

And what do these games pay out? Well, in lines of code, of course. And tech bros will brag about 10k lines of code per day. But I think the Dijkstra quote is relevant here.

My point today is that, if we wish to count lines of code, we should not regard them as ‘lines produced’ but as ‘lines spent’: the current conventional wisdom is so foolish as to book that count on the wrong side of the ledger.

Remember, the house always wins and LLMs always screw up.

4. Go in with a plan

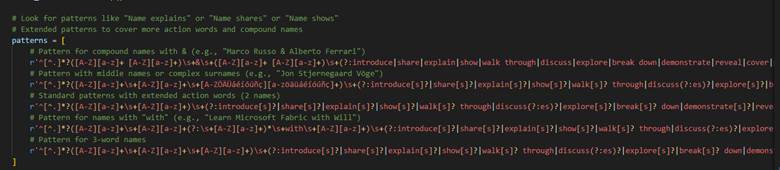

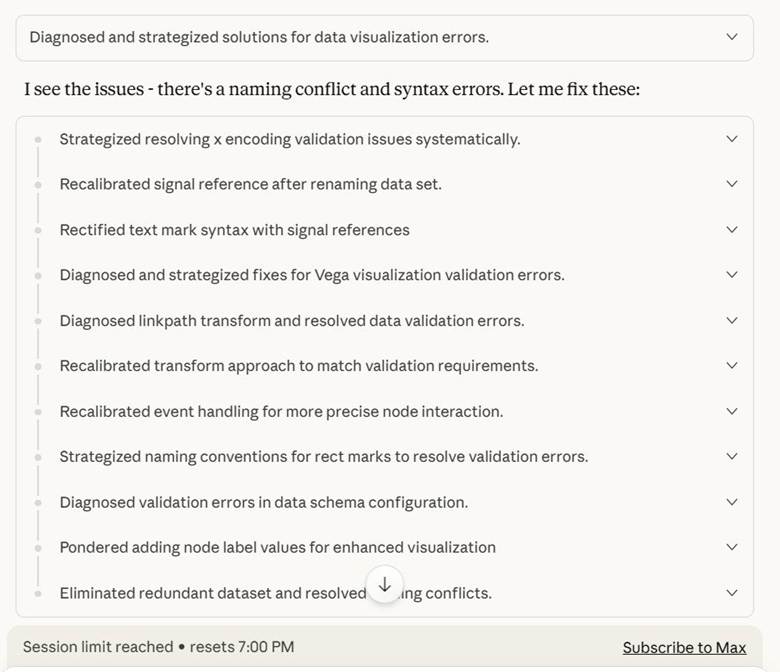

It is fun, and entertaining, to see if Claude can recreate sand physics games from a single prompt. But for anything that you actually care about, you should build out a plan. LLMs “think” as they type, one token at a time, so if you can get them to do the thinking before the building, they perform better.

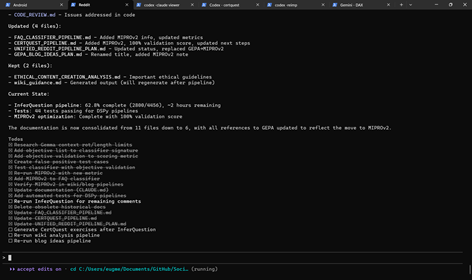

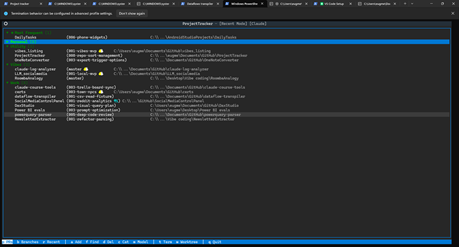

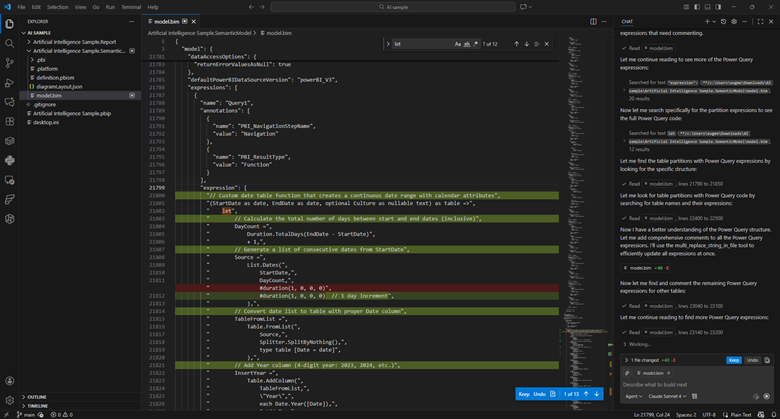

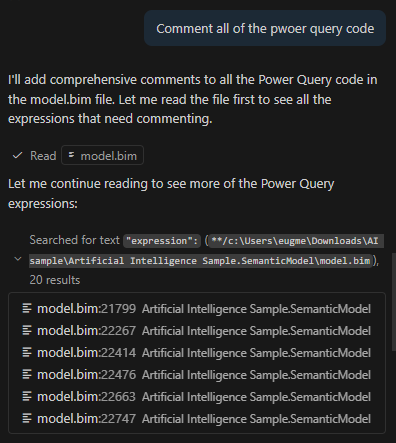

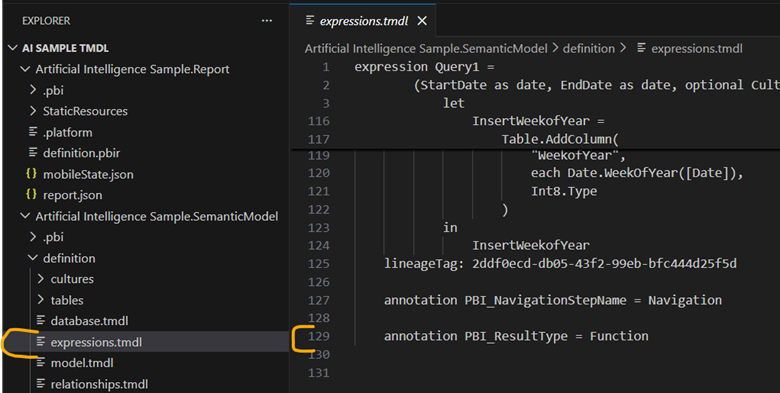

Claude Code has a planning mode, which you should use frequently. I personally like SpecKit if I need more intensive planning and scoping. That said, I think most spec-driven development is just playing Barbie Dreamhouse but with LLMs as the Barbies and scrum personas as the outfits. Still, I find a little bit of it useful sometimes.

But in general, don’t just “YOLO” your way through this. Don’t just give it a single prompt and walk away. Take the time to define success criteria, failure criteria, and the sorts of automated tests you want.

5. Don’t get distracted by shiny lights

Vegas is a sensory overload, and as an introvert, I hate it. Noises, sounds, the brightest colors you’ve ever seen, free drinks at the blackjack table. Vegas is designed to overstimulate you and keep you distracted. When I got there, I decided it was an ideal vacation for three sorts of people: the rich, kids in their 20s, drunks. Ideally all 3.

Your coding agent is also subject to distraction. It is your job to create automated ways for your computer to slap it on the wrist and say “hey, stop that.” Some people refer to this as backpressure.

AI agents do best when they can sort of bumble around like a dumb Roomba, and bump into walls until they find their way to the solution. How can you put up those walls to help it?

Use a typed or compiled language. Use linters. Use language servers that speak the Language Server Protocol (think IDE tools). Use automated tests. Use end-to-end tests and tools like Playwright. Use git pre-commit and pre-push hooks.

Anything and everything so when your LLM is dumb like me and wants to play roulette you have an automated way to go “ah, ah, ah! no, no, no!”.
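Here is a minimal sketch of one such wall, written in Python. It runs a list of checks in order and stops at the first failure, so the agent gets one clear thing to fix. The names `run_check`, `run_checks`, and `main` are mine, and `DEFAULT_CHECKS` assumes a project that happens to use `ruff`, `mypy`, and `pytest`; swap in whatever your repo actually uses.

```python
import subprocess
import sys
from typing import List, NamedTuple, Sequence, Tuple


class CheckResult(NamedTuple):
    name: str
    passed: bool
    output: str


def run_check(name: str, argv: Sequence[str]) -> CheckResult:
    """Run one command and capture its output; exit code 0 means it passed."""
    try:
        proc = subprocess.run(list(argv), capture_output=True, text=True)
    except FileNotFoundError:
        return CheckResult(name, False, f"command not found: {argv[0]}")
    return CheckResult(name, proc.returncode == 0,
                       (proc.stdout + proc.stderr).strip())


def run_checks(checks: Sequence[Tuple[str, Sequence[str]]]) -> List[CheckResult]:
    """Run checks in order and stop at the first failure."""
    results: List[CheckResult] = []
    for name, argv in checks:
        result = run_check(name, argv)
        results.append(result)
        if not result.passed:
            break
    return results


# Assumed tooling for illustration; replace with your project's own checks.
DEFAULT_CHECKS = [
    ("lint", ["ruff", "check", "."]),
    ("types", ["mypy", "."]),
    ("tests", ["pytest", "-q"]),
]


def main() -> int:
    """Print the first failure to stderr and return a shell-style exit code."""
    for result in run_checks(DEFAULT_CHECKS):
        if not result.passed:
            print(f"[{result.name}] failed:\n{result.output}", file=sys.stderr)
            return 1
    return 0
```

Call `sys.exit(main())` from a script wired into a git pre-commit hook, or tell the agent to run that script after every change. Either way, the Roomba bumps into the wall before it reaches the roulette table.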

6. Get in and get out

One of the keys to getting good results from an LLM is to provide it with just enough information and nothing more. The limit here is the “context window”, which is the sum total of tokens an LLM can see at once. This includes the system prompt, the user prompt, anything loaded from MCP servers, and the chat history. Context rot is the idea that the more of that window is filled, the more “confused” the LLM will get.

Get in, get out, clear the context window frequently. If you let it run for one long session, it will perform multiple compactions, which is where it tries to summarize the session so far and continue working. Quality is known to degrade quickly after multiple compactions.
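As a rough sketch of treating the context window like a budget, here is a bit of Python. Everything in it is an assumption for illustration: the "about 4 characters per token" estimate is a crude rule of thumb for English text (real tokenizers vary), the 50% threshold is arbitrary, and `ContextUsage`, `measure`, and `should_start_fresh` are made-up names.

```python
from dataclasses import dataclass
from typing import Sequence


def estimate_tokens(text: str) -> int:
    """Very rough estimate: about 4 characters per token (an assumption)."""
    return len(text) // 4


@dataclass(frozen=True)
class ContextUsage:
    system_prompt: int
    tool_definitions: int
    chat_history: int

    @property
    def total(self) -> int:
        return self.system_prompt + self.tool_definitions + self.chat_history


def measure(system_prompt: str, tool_definitions: Sequence[str],
            chat_history: Sequence[str]) -> ContextUsage:
    """Estimate how many tokens each part of the context is using."""
    return ContextUsage(
        system_prompt=estimate_tokens(system_prompt),
        tool_definitions=sum(estimate_tokens(t) for t in tool_definitions),
        chat_history=sum(estimate_tokens(m) for m in chat_history),
    )


def should_start_fresh(usage: ContextUsage, window_tokens: int,
                       threshold: float = 0.5) -> bool:
    """True once the estimated usage reaches the given fraction of the window."""
    return usage.total / window_tokens >= threshold
```

The useful part is less the exact numbers and more the habit: notice how much of the window is already spent on prompts and tools before you type a word, and cash out to a fresh session before the history eats the rest.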