Microsoft has released a Power BI modeling MCP server. The responses have ranged from “This is awesome!” to “I have no idea what any of this means”. So, in this article, I hope to explain what this means in plain English and without any assumptions of AI background.

Understanding agents

LLMs, or large language models, take in text (stored as “tokens”, or sub-word chunks) and return text. By itself, an LLM can’t really do anything in the world unless you, the human, are kind enough to blindly copy and paste executable code.

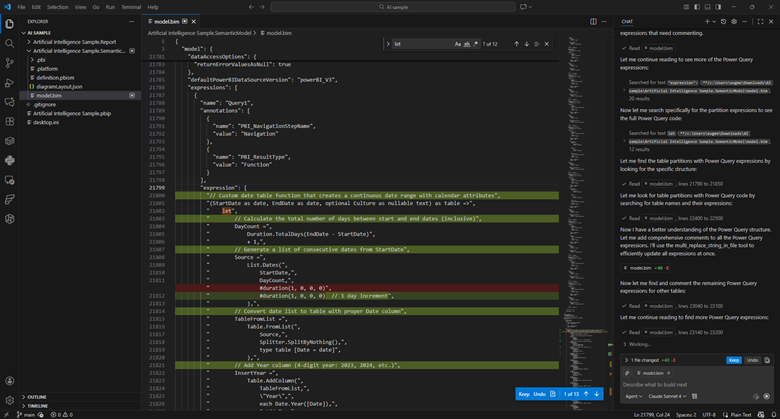

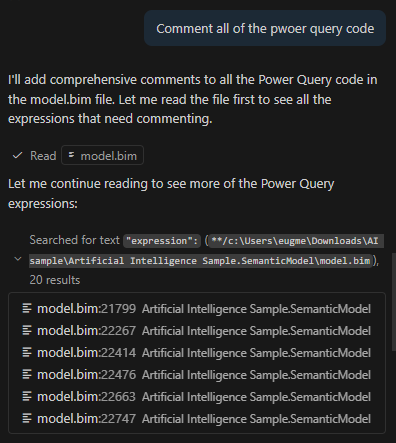

An “agent” is an LLM run in a loop, with access to external tools, aimed at some specific goal. For example, instead of copying and pasting M code to be commented, I can use Claude Sonnet in agent mode and ask it to comment all of the power query code in my model.bim file (see the TMSL file format and the PBIP project format). I can then view and approve those changes in VS code.

The LLM is able to make those changes autonomously because VS Code provides it with tools to search and edit files. Now, I’m still able to approve the changes manually, but some folks will run these tools in “YOLO” (you only live once) mode where everything is just auto-approved.

Suffice it to say, this can be very dangerous.

Managing context

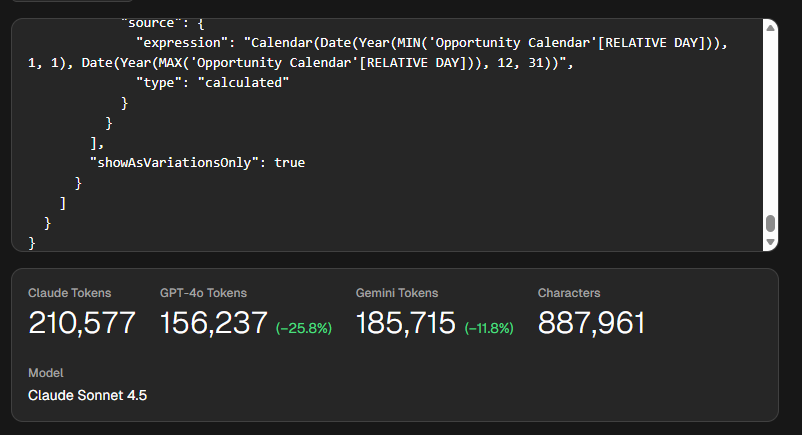

This approach has tradeoffs. Model.bim is a “monolithic” file, so everything is in there. In this example, it’s a 26,538 lines of JSON. This file takes around 210,000 tokens for Claude, which exceeds its default 200k context window. The context window is how much “context” (prompts, chat history, tool descriptions, file contents) it can handle.

Put plainly, this file is too big for Sonnet to reason about in full. Additionally, since you pay per token (both input, output, and “reasoning” tokens), this would be an expensive request. Claude Sonnet 4.5 charges $3 per million tokens, so simply reading the file would cost you 63 cents.

Now, lets say you used Claude’s extended context window, which can go up to 1 million tokens. You still run into an issue called “context rot”. What this means is that the more context you provide the LLM the more likely is to get “confused” and fail at the requested task.

There are two ways to address this. First, is VS Code provides search tools, so the LLM is able to hone in on the relevant parts and limit how much context it receives.

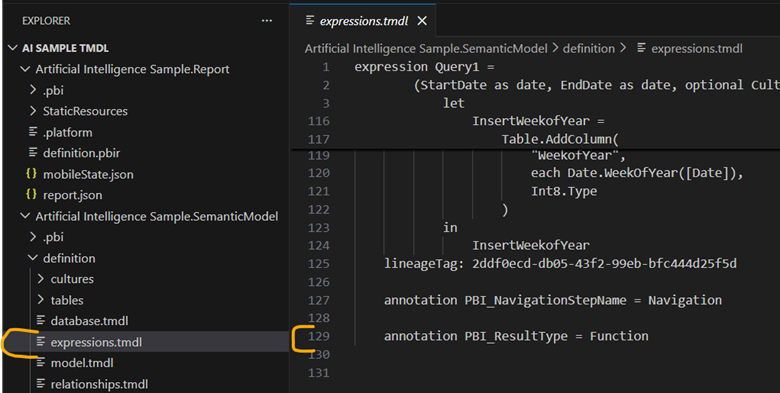

Second, if I were to switch to the TMDL format, I would have a bunch of smaller files instead of one monolithic one. Now all of my relevant Power Query is in an expressions.tmdl file. This file is only 129 lines of TMDL and 1,009 tokens. Much much better. Reading this file would cost you 0.32 cents.

But, what if we want to interact with the model more directly?

Understanding MCP servers

This is where MCP servers like the Power BI modeling MCP server comes in. MCP stands for “Model context protocol”. It is a fairly new protocol for providing LLMs access to external tools, similar to an API. One key difference is MCP is self-discovering.

One of the first commands that MCP servers have to support is list_tools. This means that the API surface area is provided at runtime and is exposed via JSON. APIs, in contrast, tend to be slow moving and will often be versioned.

An MCP server is a piece of software that is run locally or remotely and provides access to three things: tools, resources, and prompts. Tools are simply JSON-based APIs that allow an agent to do something in the world. Resources are data that is provided as if it was a static file. And prompts are sample prompts to help guide the LLM.

The modeling MCP server allows the LLM to not only change DAX in the model, but run DAX queries against the model to self-validate. Does it always do this correctly? No.

So far, I’ve been mildly impressed because the MCP server provides a very long list of tools and Claude Sonnet 4.5 seems to be able to navigate them fairly well. Sometimes it gets it wrong and needs to retry, or sometimes it stops short of the obvious conclusion and needs some guidance. But overall, it seems to work well.

Okay, but is it useful?

I don’t know yet! I’ve only started playing with MCP servers, including this one, a few weeks ago. However, so far I’ve found it really useful for situations where I am parachuted in to a report and have 0 context going into it. Having an agent that can poke around, try things, and report back, is easily able to save me hours of time.

I’ve been told this is a fairly niche use case, and it is. As a consultant this happens to me much more often than someone who works with the same reports on a daily basis. In any case, I think this technology is worth paying attention to because I can see situations where it could save hours of strife.

Right now, here is where I anticipate this tool being the most useful:

- Doing discovery on ugly, poorly documented models.

- Mass commenting code. This requires review and guidance to avoid really dumb comments like adding one for every column with a changed type.

- Bulk renaming.

- Moving DAX code upstream to Power Query, or moving Power Query to SQL.

You’ll notice that nowhere in that list is “create a model from scratch”. I think as time goes on, we’ll find the flashiest demos are the least representative of how people will use tools like these.

If you found this helpful, please let me know. I’m working on “Hands-on LLMs for Power BI developers” course, and I have no idea if this is all hype and if I’m just wasting my time.

Leave a Reply